Repost from: https://marketingland.com/are-social-platforms-finally-growing-a-conscience-246210

I’d imagine Facebook now looks back fondly on 2016, when their greatest PR disaster involved reporting incorrect video metrics. This year, by contrast, has seen them plagued by negative headlines and thrown into the spotlight of not just the media industry, but the whole world.

Allegations of foreign interference in elections, massive data breaches, concerns about content on the platform and its impact on people’s mental health barely scratch the surface of growing public concern around social platforms. Major players now have no choice but to stand up and take action.

Here, we look at efforts made across Facebook, Instagram, Twitter and Snapchat to address several widespread issues that are impacting all users of social media today, including marketers seeking positive environments in which to connect with customers and prospects.

Privacy

Data privacy isn’t a new concept, but the scandal surrounding Cambridge Analytica illegally obtaining data on millions of Facebook users without their permission turned it into a hot topic. As digital marketers, it’s easy to assume that people know that there’s a trade-off when they use a social platform for free — that the company’s business is sustained by gathering their data and selling it to advertisers. But that will no longer cut it; people want to know exactly what information about themselves is being shared, and with whom.

Since the scandal broke, Facebook has greatly limited the amount of user data available to apps and instituted a more comprehensive review process for app developers. It also launched a tool that allows users to delete apps in bulk from their Facebook accounts.

Earlier this year they also removed Partner Categories as a targeting option for advertisers. These categories were built on third-party data from providers such as Experian and Oracle. This isn’t the type of data under the most scrutiny (no illegal use of it has been made public), and the move has frustrated brands and agencies alike, since what was a highly effective targeting option is now much more difficult to access. The data is still accessible; Facebook has simply removed itself from the data-broker role and put the onus on advertisers to develop direct relationships with those data providers.

All in all, it’s something of an empty gesture with no real impact on how people’s data is being used, but when you’re looking down the barrel of the biggest PR disaster in your company’s history, drastic steps have to be taken.

Facebook was fined £500,000 by Britain’s data watchdog (the maximum amount allowable) for breaches of the Data Protection Act, but, had the breach occurred after the GDPR came into effect, the fine would have been closer to £2 billion. And while Q2 saw revenue and user growth decline, CEO Mark Zuckerberg assured us that this was to be expected. He has stated that they are investing so heavily in security and user privacy initiatives that it’s going to impact their profitability, and that user loss was expected in the wake of GDPR.

Facebook is a business and its primary goal will always be driving revenue, but all signs point to user privacy moving much higher up their priority list as consumers, and their representatives in government, make it clear that this needs to occur.

Fake news

The widespread proliferation of fake news during the 2016 US elections highlighted a key issue with social platforms – how much of what you see can you believe? Clickbait and bots are a long-running problem, but when “bad actors” were seen to have an impact on the democratic process of the most powerful nation on Earth, major social platforms began to take action.

Both Facebook and Twitter have imposed much stricter regulations on political ads, including labels for advertisers on behalf of political candidates, who must self-identify and be verified as located in the relevant country. (Google has also tightened its rules, unveiling a political ad transparency report and a library of political ads.)

For the 2018 mid-terms, foreign nationals are forbidden from targeting political ads to users in the US. And this demand for more transparency has spread beyond just political advertisers – users now have the ability to see every ad run from a particular Facebook page or Twitter handle, whether they fall into the category of users originally served the ad or not.

The last few months have seen a huge drive from Twitter to repair the damage done to their reputation by a hotbed of fake accounts and trolls.

They’ve removed 214% more “spammy” accounts in the last quarter year-on-year, and have also purged locked accounts. Although follower numbers for many well-known names have taken a hit as a result, the moves have been greeted with universal praise as steps toward cleaning up the digital space.

Additionally, in a bid to minimize bot activity, they’ve disabled the ability for apps like Tweetbot and Twitterrific to keep real-time streams or send push notifications, and they no longer allow simultaneous posts with identical content across multiple accounts (a problem for social teams managing multiple accounts under the same brand).

All of these moves speak to the fact that Twitter wants the engagement on its platform to be from real people, not machines.

Not all social platforms have had to take such drastic action though. Snapchat offers a different model than Facebook and Twitter where there is less opportunity for the wrong content to go “viral,” and fake news is unlikely to make it through the net. While much of the communication that happens on Snapchat occurs directly between users, the company’s Discover platform offers a curated collection of news from publishers that have entered into partnerships with the platform. Meanwhile, user-generated content promoted to non-subscribers in the Discover area is moderated by human reviewers.

The situation may change as Snapchat grows, puts more effort into the Discover platform and begins to allow the distribution of content outside of its walled garden, an effort it’s pursuing with its Story Kit API, announced in July. Still, the company has the benefit of learning from others’ mistakes.

Digital wellness

There have been a number of reports analyzing the impact of social media on mental health. It can have a detrimental impact on self-esteem and personal connections, and it can provide a platform for bullying.

A recent Asurion survey found that, when asked how long they could live without certain items, most people cited the same amount of time for their mobile phones as they did for food and water. And social media is one of the reasons people say they couldn’t go longer than a day without these “must-haves,” with a Pew Research Center poll showing that a majority of users visit social media platforms daily, and quite a few visit multiple times a day.

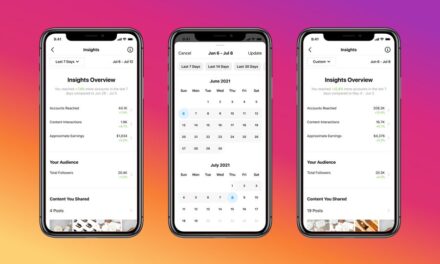

While it might seem counterintuitive for social platforms to try to make you spend less time on them, there is now pressure for them to ensure it’s time well spent. Facebook recently added a feature that allows users to see how much time they’re spending on the platform each day, and they can even set themselves a limit.

Instagram has a new “You’re All Caught Up” notification in its feed encouraging people to take a break from endless scrolling – something I personally have found to cut my usage down considerably.

Meanwhile, Apple and Google are both integrating features into their mobile operating systems which are aimed at helping people get a handle on their usage. YouTube just this week rolled out a report in “Watch History” that lets users monitor how much time they’re spending on the platform, after previously releasing a feature that allows people to set a timer to remind them to take a break.

Content review policies

But even with less time spent online, the content to which users are exposed could still be doing damage. A recent Dispatches documentary put Facebook’s content review policies in a very damning light, with graphic violence and even child abuse deemed “suitable” to be left on the platform for public consumption.

While Facebook argued that some of the policies mentioned in the show were incorrect, many of their legitimate content review policies remain problematic. They continue to tread a fine line between regulating content to minimize hate speech and other damaging material and continuing to allow free speech.

Facebook isn’t the only player to struggle in this arena. In the fourth quarter of 2017 alone, YouTube removed 8 million videos featuring violent or extremist content. More recently, it established new rules governing which videos can carry ads.

With so many grey areas, it’s certainly not an easy job. But my feeling is the “free speech” excuse will no longer cut it when it comes to the proliferation of certain types of content. There is more to be done by the social giant to clean up its News Feed and make it a truly safe space.

Final thoughts

Any platform with millions of users who spend hours of their daily lives engaged has a responsibility to protect those users. Up to now, there have been too many instances of the major social networks shirking that responsibility, but we are now seeing an era of awakening, where the public is demanding more, and action is being taken. One can only hope this continues and the online ecosystem becomes a safer, less-polluted space for all concerned.